Integrating Real and Simulated Data in Dyna-Q Algorithm

Dyna-Q is a conceptual algorithm that illustrates how real and simulated experience can be combined in building a policy. Planning in RL terminology refers to using simulated experience generated by a model to find or improve a policy for interacting with a modeled environment ( model-based )¹.

Learning refers to using real interactions with the environment to build a policy ( model-free )².

In both cases experience ( real or simulated ) is used to search for the optimal policy through state-space based on updates ( backups ) of value functions to the initial state values.

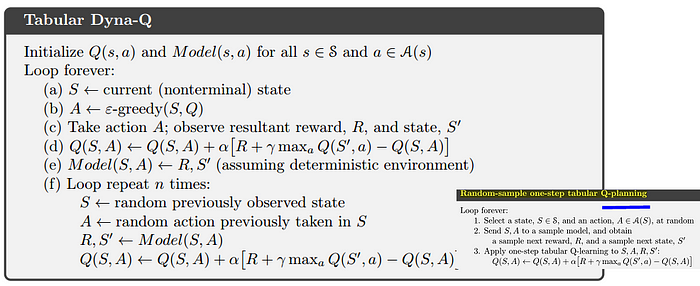

Dyna-Q algorithm integrates both direct RL and model learning, where planning is one-step tabular Q-planning, and learning is one-step tabular Q-learning ( Q — action value method — is used in both cases ). Real or simulated experience improves both the model via model learning and value function/policy via direct RL and planning. Model contains state, action, next state and reward tuples. Thus the model can be both improved and queried to get to the next state in planning part.

One of the main objections to RL is the need for many samples/interactions with the real environment. This is a problem in cases where interactions are naturally sparse or costly ( securities trading, self-driving cars ). Dyna-Q is a conceptual example of an RL algorithm that can combine both real and simulated experience³.

Here is Dyna-Q algorithm in pseudo-code — step (f) is planning stage, step (d) is direct RL and step (e) is model learning/update:

Sutton’s book has Dyna Maze example where agent has to find the most efficient policy to get from S — start to G-goal state ( grid position; gray states are obstacles):

There is an excellent github repository maintained by Shangtong Zhang, which implements all main algorithms from Sutton’s book. Dyna Maze example is coded to demonstrate major Dyna-Q themes. Below is comparative output for an online agent that performs 0 and 50 planning steps:

It is clearly evident that number of steps per episode needed to reach goal state decreases with an increase in the number of planning steps. I changed ε — exploration probability from 0.1 to 0.4 to get the program to converge faster. Exploring more options either via more epsilon-greedy actions or increased planning obviously leads to better results/faster convergence.

State, action, next state and reward is preserved between runs in the model, which means that multiple runs incrementally increase policy optimality.

¹ Sutton, Barto ( 2020 ). Reinforcement Learning, An Introduction 2nd ed.

² The intent of concepts like Dyna-Q is to bring closer together initially separated planning and learning ( for didactic purposes ).

Learning could also refer to learning a model i.e. it is not strictly referring to model-free.

³ Simulated experience is cheaper and of lower quality.